Many of my blog posts are focused on Azure cost reduction as this is essential to keep in mind when designing cloud solutions. Azure is a very cost-effective platform when it’s used in the right way, meaning you always have to consider the fact Azure is elastic and make sure your solutions scale up and down in an efficient way. Previously I showed how to autoscale Azure Analysis Services and Azure SQL Database. This time I show how to process Azure Analysis Services objects (models) in a cost-conscious way.

In my last blog post I showed how to Process Azure Analysis Services objects from Azure Data Factory v2 using a Logic App. Based on a simple example I showed how to link Azure Data Factory and Logic Apps using a webhook and how to communicate with the Azure Analysis Services (Azure AS) REST API from a Logic App. For this blog post I teamed up with a cloud integration specialist from Macaw, Stefan Conijn, to create an advanced Logic App that scales Azure AS up, processes your Azure AS objects and scales Azure AS down again. This is a common pattern as processing is a memory consuming operation, which often requires to scale up. The solution is cost-conscious because it waits for the process operation to finish after which Azure AS will be scaled down immediately.

A couple of things that are good to know when using this solution:

- The Azure AS API is asynchronous. The only response you will get will be a 202 (accepted) status code that tells you the message arrived successfully, but that’s it. You need to poll for progress yourself. This is actually one of the advantages of using the API for processing because the traditional methods may require long-running HTTP connections. Given the nature of HTTP, it may be more reliable to avoid long-running HTTP connections from client applications as you can read in this blog post by Microsoft.

- Logic Apps can be whitelisted on your Azure AS firewall because the outbound IP adresses of Logic Apps per Azure region are published online. This means you can keep your firewall on while processing your models using a Logic App.

- The Logic App is by default configured to process your entire model. You can change body of the request to process specific objects (tables/partitions) as desired.

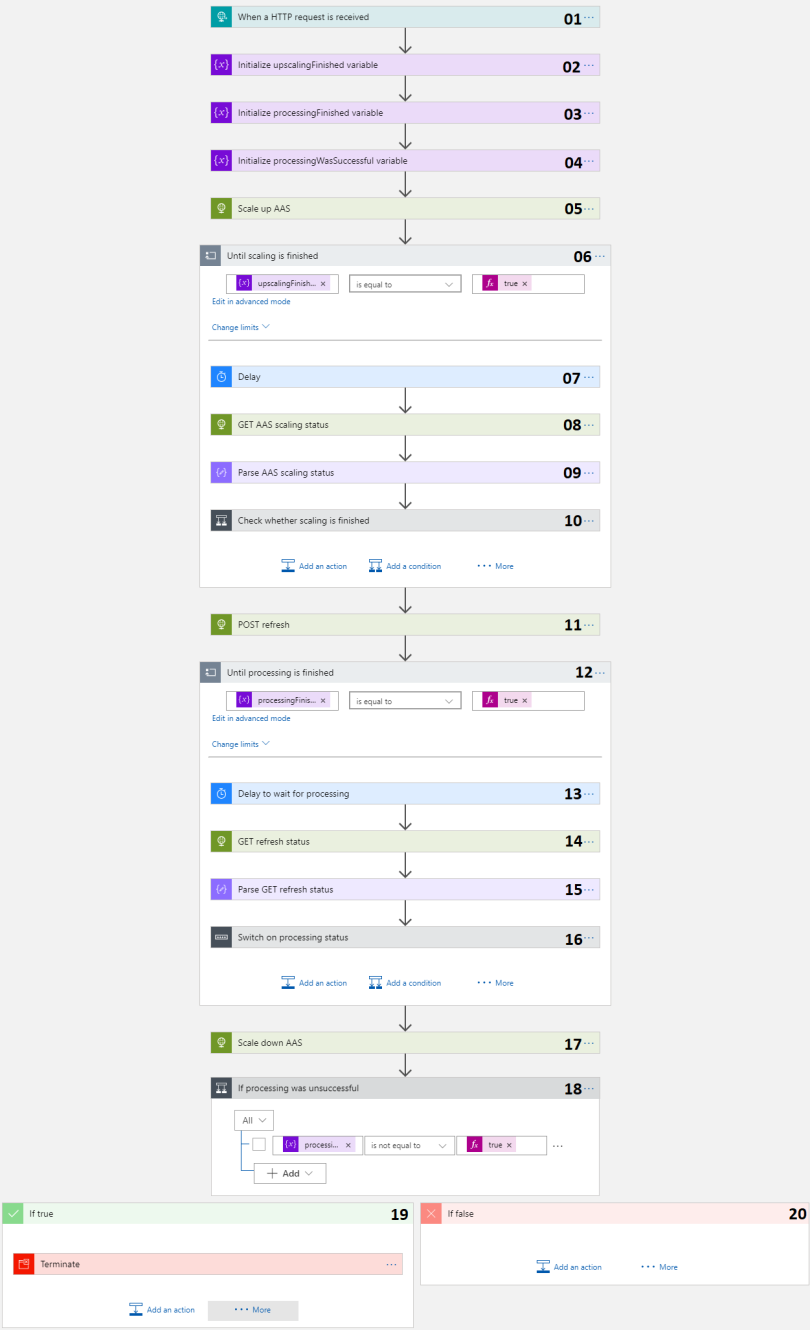

Below a screenshot of the Logic App. Every step is numbered and explained below the screenshot.

- The actual trigger for the Logic App. Can be triggered manually or via an HTTP POST request.

- A variable used to stop the ‘do until’ loop statement when upscaling of AAS is finished.

- A variable used to stop the ‘do until’ loop statement when processing of AAS is finished.

- A variable used to check if processing of AAS finished successfully.

- HTTP PATCH request to the Azure Resource Management API to scale up the AAS instance.

- ‘Do until’ loop that is used to wait for the upscaling of the AAS instance to finish.

- A delay of 30 seconds in each cycle of the ‘do until’ loop.

- HTTP GET request to the Azure Resource Management API to poll the status of the scaling process.

- Parse the result of the GET request above, so that we have easy access to the response object.

- Condition to check whether the scaling was finished. If so, set upscalingFinished variable to true so that the ‘do until’ loop is discontinued.

- HTTP POST request to the AAS REST API to trigger a refresh of the model.

- ‘Do until’ loop that is used to wait for the processing of the AAS model to finish.

- A delay of 30 seconds minute in each cycle of the ‘do until’ loop.

- HTTP GET request to the Azure Resource Management API to poll the status of the refresh process.

- Parse the result of the GET request above, so that we have easy access to the response object.

- Switch over the possible processing status values: inProgress, notStarted, succeeded or no matching value. If status equals succeeded set variables processingFinished and processingWasSuccessful to true. If status has no matching value, set variables processingFinished to true and processingWasSuccessful to false.

- HTTP PATCH request to the Azure Resource Management API to scale down the AAS instance.

- Check if processing was unsuccessful.

- If processing was unsuccessfull set the status of the Logic App to ‘failed’ by using a ‘terminate’ action.

- If processing was not unsuccessfull do nothing.

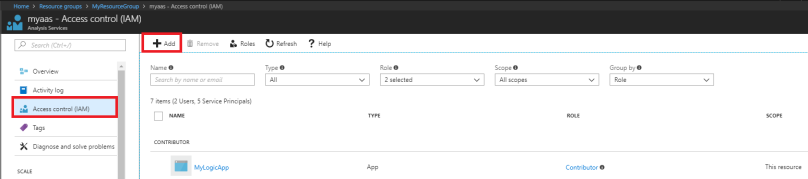

Deployment of the Logic App to your own Azure subscription is easy. First make sure you have a Service Principal (App Registration) that has the 3 permissions below.

- Azure Analysis Services Server administrator

- API Access: Azure Analysis Services (SQL Server Analysis Services Azure) – Read and Write all Models

- Contributor role on the Azure Analysis Services service in Azure

A step-by-step guide to create a service principal and grant permissions 1 and 2 to it can be found in my previous blog post. To add the service principal to the Contributor role you have to navigate to your Azure AS service in the Azure portal, click “Access control (IAM)” and then click “+ Add”. Now select the contributor Role and select your service principal by searching on it’s name:

Now go to my github repository and click on the “Deploy to Azure” button. After you have logged in to Azure to following form appears. Fill the required parameters, agree with the terms and click on Purchase. After the deployment go to the created resource group and click on the Logic App resource. Click on Edit and verify that it was deployed correctly. Run the Logic App!

Many thanks to Stefan Conijn!!

Thanks Jorg & Stefan! I’ve some more models in my solution so I’ve added a parallel path. I know… error handling could be done better 😉 https://i.imgur.com/iBotiJk.png

Keep it up! HK.

LikeLiked by 1 person

Hi Henko,

Can we parallel proces more models dynamically? E.g. a JSON parameter array in the POST body?

Gr. Ton

LikeLike

Did any of you manage to have HTTP POST commands that processes parts of a model. 1. Process Dim tables, 2. Process Fact 3. Proces recalc. I can’t seem to get it to wait for completion if i’m processing parts instead of complete database refresh

LikeLike

Hi Christian, can you try again? Stefan and I just published a new version of the Logic App. Please let us know if everything works as it should now!

Regards, Jorg

LikeLike

Hello Jorg. In my version, the Scale down AAS (step 10) starts before the POST Refresh step 9 has finished. Step 10 is starting a few seconds after step 9. My understanding is that by default the logic app will only continue to step 10 once step 9 has completed as it’s asynchronous by default?

LikeLike

Hi Matt, Stefan and I just created a new version of the Logic App that loops until processing is finished before scaling down. Can you try deploying from my github repository again? And please let us know how it went. I have just tested it against a large model and this went fine now!

Thanks for letting us know!

Jorg

LikeLike

Thanks Jorg. I was actually building my own version from scratch and using yours as a guide.

Interestingly, at step 11, for the POST refresh, I found that I needed to disable the Asynchronous Pattern in the Settings. If left enabled, step 12 gets stuck in a loop. I think this is because step 11 would return a 200 response code, but when Asynchronous is enabled it needs to see a 202 response code coming from Step 11.

I have it working now, so thanks!

LikeLike

Great to hear Matt, I’m glad it’s working now! Yeah, Stefan and I noticed this also and therefore we added the loop at step 12 to wait for the processing to finish. If you have any more feedback in the future, just let us know!

LikeLike

Hi Jorg and thanks for sharing this setup on github – not much is available for azure yet.

I installed following your steps in this article and the one before it. It scales up the AAS correctly (I can verify in the portal) and scales down correctly and it posts to the async api and gets a 202 response so all of that seems ok. It can even ask for the status of the processing in the GET request. However the process itself fails with error “0xC112001B”,

“message”: “The given credential is missing a required property. Data source kind: SQL. Authentication kind: OAuth2. Property name: AccessToken. The exception was raised by the IDbConnection interface.”,

“type”: “error”,

I have rechecked all the security on the service principal and am unsure how to continue. Any ideas?

Thanks in advance!

LikeLike

Hi,

Excellent set of guides. I’m having a similar problem to Asbjorn when connecting to the SQL servers. I get an error message saying “The credentials provided cannot be used for the SQL source”. Any hints on how to fix this

LikeLike

Add Logic Apps outbound IPs (of the region where your Logic App is running) to Azure SQL Server Firewall and see if it works. The IP addresses are here https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-limits-and-config#configuration-ip-addresses

LikeLike

Hi Ramki.

Thanks for the reply. I’ve allready added the IPs to the firewall with out any luck

LikeLike

Hi Jorg,

Is this Blog code available for download of Github where I can see what are the issues I have in the code as the process dont stop and I keep getting 409 conflict error as I am trying to process tables in a sequence with number of partitions and it refuses to wait.

LikeLike

Hi Jorg,

How can I get the live status of cube processing like a status bar saying this much percentage is completed or some estimated end time.. etc.. Please help!

Thanks in advance

LikeLike

Very Nice Article Jorg.

However I dont seem to get a Location header.. I receive this;

“statusCode”: 200,

“headers”: {

“Strict-Transport-Security”: “max-age=31536000; includeSubDomains”,

“x-ms-root-activity-id”: “37b7dda1-5ee8-4a56-868a-c98bfea617ec”,

“x-ms-current-utc-date”: “1/28/2019 5:23:10 PM”,

“X-Frame-Options”: “deny”,

“X-Content-Type-Options”: “nosniff”,

“X-XSS-Protection”: “1; mode=block”,

“Content-Security-Policy”: “script-src ‘self'”,

“Date”: “Mon, 28 Jan 2019 17:23:10 GMT”,

“Server”: “Microsoft-HTTPAPI/2.0”,

“Content-Length”: “2680”,

“Content-Type”: “application/json”

},

any idea of how I can obtain the Refresh ID?

LikeLike

Turn off async move on that request

LikeLike

On your HTTP request where you’re posting the refresh, if you hit the ellipsis top right it opens a settings panel. There is the option to make the request synchronous which resolved the issue where you don’t receive the location in the header response.

LikeLike

Hi Jorg,

Thanks for the article, it simplified significantly my life=)

From your experience what is the best way to debug aas refresh logic app? I’ve recently noticed that refresh fails and finishes successfully almost randomly. It just fails after 10 minutes of processing. Manual refresh always goes well, but being called from logic app it’s unpredictable (and this is not acceptable in live). Could you please advise, how to debug the app?

LikeLike

Check the until loop it has a max number of loops set to 60… if it loops more than 60 times then it will fail.

LikeLike

Hi Guys,

Thank you for ther article its great!!

In my HTTP Response i dont find Refresh ID, could you help to find?

Thank you so much!!

LikeLike

Thanks for creating the logic app it’s worked perfectly for me.

I managed to add logic to your template to push a sync to the replicas and wait until that is successful.

I’m wondering if you know a way to Suspend and Resume an instance using the logic app.

I’ve tried a post to this API

https://management.azure.com/subscriptions/xxx/resourceGroups/RGPROD/providers/Microsoft.AnalysisServices/servers/prodssas02/suspend?api-version=2017-08-01

But it error’s on POST, played around a little with the auth url with no success.

Also is there a way from logic app to add and remove the query replicas

I’ve looked around everywhere and I’d prefer not to use powershell runbook if possible as the scheduling is more granular with logic app.

LikeLike

Hi Jorg,

Thanks for the article, it helped me a lot, I have a question my fact table contains multiple partitions one per month I have 2 years of history something like 1500 million records, the question is can I only process the last two partitions of the year of my fact table.

Thank you so much!!

LikeLike

Great stuff Jorg & Stefan! Much appreciated.

I was wondering what is the preferred way to manage partitions dynamically?

Many thanks.

LikeLike

Hi Jorg,

My logic is scaling down before it could complete processing, can any one help me with that??

LikeLike

Hi, I would like to know if there a way to process on prem analysis service from Azure using a logic app, or is there any other way to process on prem Tabular model from azure

LikeLike

Hi all, I am getting an error while i am trying to process the azure analysis server using logic app.

Error:-“code”: “0xC112001B”,

“message”: “Login failed for user ‘admin’.. The exception was raised by the IDbConnection interface.”,

“type”: “error”,

LikeLike