In this blog post I will show how you can orchestrate processing of your Azure Analysis Services objects from Azure Data Factory v2. This makes it possible to process an Analysis Services model right after your Azure Data Factory ETL process finishes, a common scenario.

I will guide you through creating a Logic App that communicates with the Azure Analysis Services REST API and show how you can trigger it’s execution using a webhook from Azure Data Factory v2. The whole process will be executed using an Azure Active Directory App registration that has been granted access to process Azure Analysis Services objects via it’s REST API.

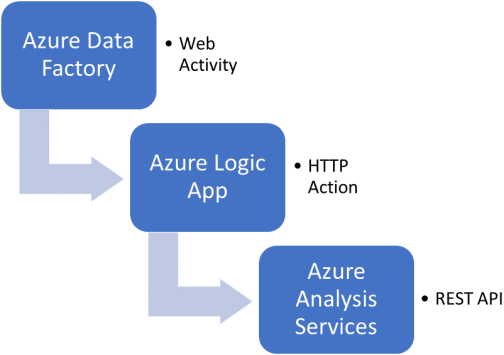

The following figure gives an overview of the process:

Now let me guide you through the following steps:

- Create App Registration (application service principal) in Azure Active Directory

- Grant App Registration permissions to process your Azure Analysis Services model

- Create the Logic App that will communicate with the Azure Analysis Services REST API to process a model

- Create an Azure Data Factory pipeline with a Web Activity that will trigger the execution of the Logic App

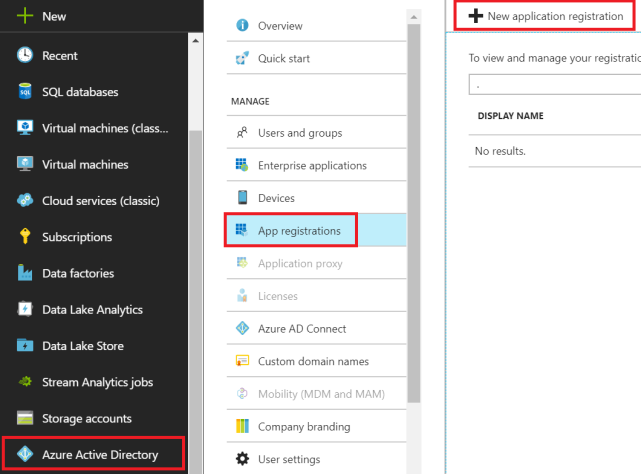

Create App Registration (application service principal) in Azure Active Directory

Navigate to the Azure Active Directory page. On the Overview page, click “App registrations” followed by “+ New application registration”.

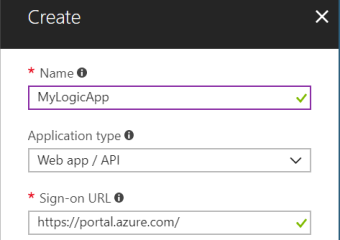

Enter a name for your App registration, select Web App / API for application type and enter a dummy Sign-on URL as we don’t intend to use Web Authorization.

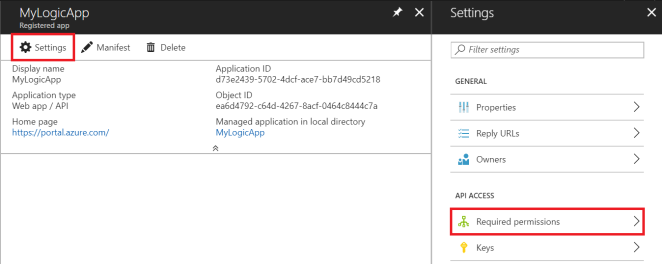

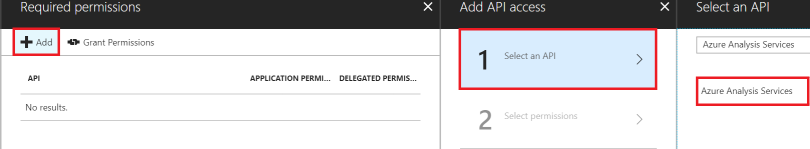

In the Settings section for your App registration, click Required permissions.

In Select an API, type “Azure Analysis Services” into the search box. Then select “Azure Analysis Services”.

In Select permissions, select “Read and Write all Models” and then click the Select button. Then click Done to add the permissions. It may take a few minutes to propagate.

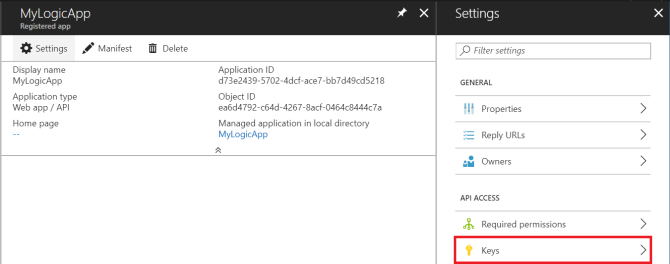

Back in the Settings section for your App registration, now click Keys.

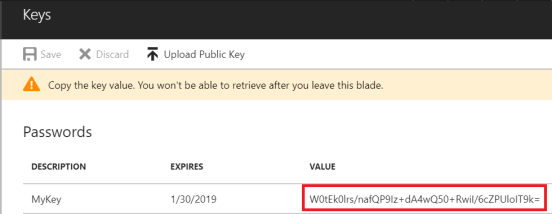

Enter a key description, choose a duration and click Save. A new key will be created. Make sure to copy and save it as you won’t be able to retrieve it after you leave this blade.

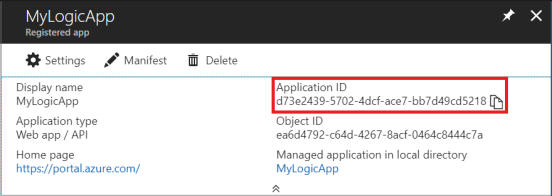

The Application Registration is ready. Take note of the Application ID in the settings section for your App registration as you need it later when configuring the Logic App.

Azure Active Directory ID (Tenant ID)

When you will configure your Logic App to use the App Registration we just created, you also need to provide a value for the Tenant property. This reflects to the Azure Active Directory ID in which the App Registration is created.

Navigate to the Azure portal, go to the Azure Active Directory page and click Properties. Now take note of the “Directory ID”.

Grant App Registration permissions to process your Azure Analysis Services model

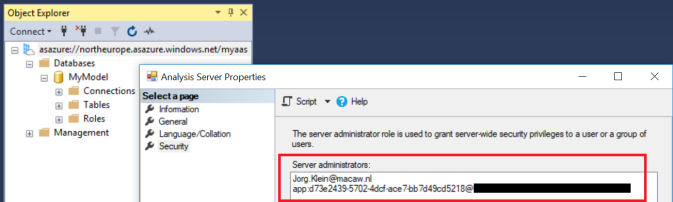

Connect to your Azure Analysis Services server using SQL Server Management Studio. To process models using the API, the App Registration needs Server administrator permissions.

Open the Analysis Services Server Properties, click Security and click Add. You can add the App Registration as a manual entry using the Application ID (app guid) and the Azure Active Directory ID (tenant guid) that you saved before. Use the following syntax:

app:<app guid>@<tenant guid>

Create the Logic App

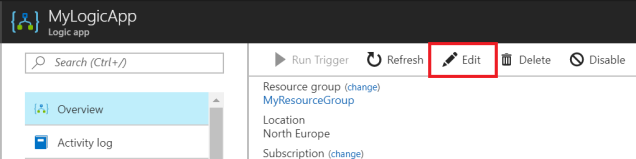

Create a new Logic App from the Azure portal Marketplace and click Edit.

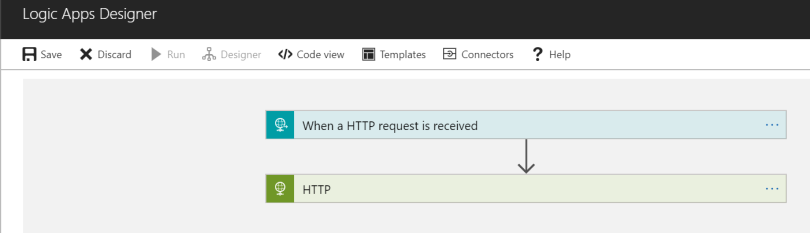

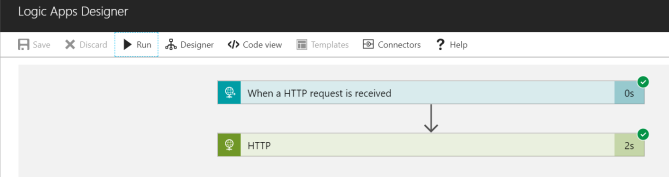

Pick the “When a HTTP request is received” trigger from the list of most commonly used triggers.

Click “+ New step”, “Add an action” and choose the “HTTP – HTTP” action.

Your Logic App Designer now contains all the necessary components to be triggered from Azure Data Factory and to communicate with the Analysis Services REST API.

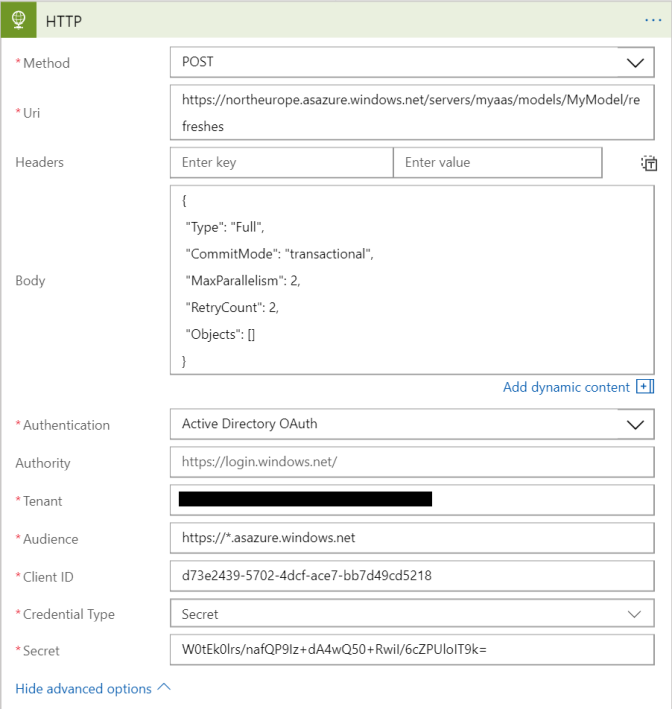

We now need to configure the HTTP action. You can find background information regarding the Uri (Base URL) and Body in the REST API for Azure Analysis Services documentation on the Analysis Services Team Blog.

- Method: POST

- Uri: https://northeurope.asazure.windows.net/servers/myaas/models/MyModel/refreshes

The base URL for the Azure Analysis Services REST API to process (refresh) models follows the following format:

https:// <rollout>.asazure.windows.net/servers/<serverName>/models/<resource>/refreshesIn this URL replace the following values with parts of your Azure Analysis Services Server Name property and model name. In this example my server name was:

asazure://northeurope.asazure.windows.net/myaas- <rollout>: northeurope

- <serverName>: myaas

- <resource>: MyModel (model name)

- Body: Read the REST API documentation on the Analysis Services Team Blog under “POST /refreshes” for more information. In the example below I will refresh my entire database (model) by a process Type Full, but processing specific tables or partitions (objects) is also possible.

{ "Type": "Full", "CommitMode": "transactional", "MaxParallelism": 2, "RetryCount": 2, "Objects": [] } - Authentication: Active Directory OAuth

- Tenant: Use the Azure Active Directory ID that you saved before

- Audience: https://*.asazure.windows.net

- Client ID: Use the App Registration Application ID that you saved before

- Credential Type: Secret

- Secret: Use the App Registration Key that you saved before

Your Logic App HTTP Action should now look like this:

Test if everything works as expected by running your Logic App. You can fold the HTTP Action open to see the REST API response code and message.

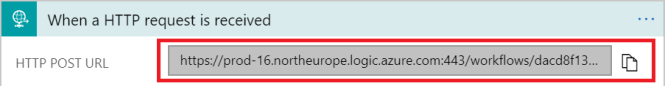

The Logic App works and is ready to get executed from Azure Data Factory. Open the “When a HTTP request is received” trigger and take note of the HTTP POST URL.

Create the Azure Data Factory

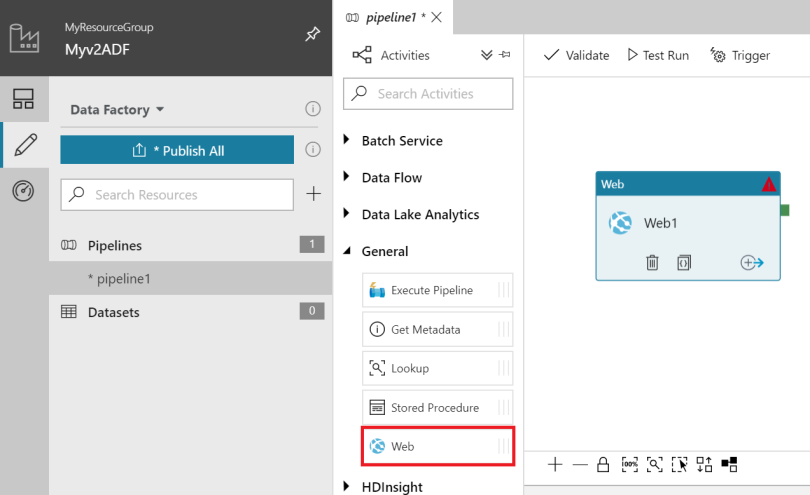

Create a new Azure Data Factory v2 from the Azure portal Marketplace. Click on the “Author & Monitor“ tile in your provisioned v2 data factory blade to open the visual tools for ADF v2.

In the visual tools, create a new pipeline and drag and drop a Web Activity on the pane.

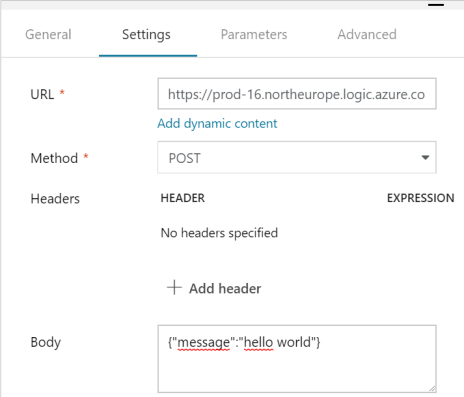

Configuring the Web Activity is easy. Open the Settings tab and specifiy the following property values:

- URL: Use the HTTP POST URL of the Logic App that you saved before.

- Method: POST

- Body: A body is required but not needed for our Logic App to function the way we want, therefore use the Sample:

{"message":"hello world"}

Your Web Activity Settings should now look like this:

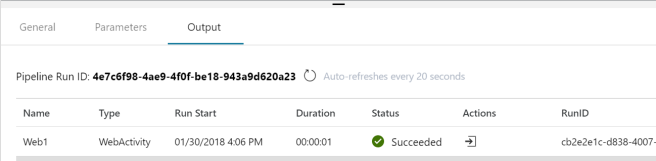

Execute a Test Run and check the Output tab to see if everything works as expected.

Now open your Logic App and check if it ran succesfully.

Your Analysis Services model has started processing from Azure Data Factory! In a next blog post I plan to show how you can enhance the Logic App with a workflow that will poll the Analysis Services REST API to check when the process operation is completed.

Update: I have created a more advanced version of this Logic App, read about it here: Process Azure Analysis Services objects using a Logic App part 2

Hi,

I ran the steps above and when I run my data factory pipeline I get following error in MyLogicApp

{

“code”: “Conflict”,

“subCode”: 1,

“message”: “Internal error AsyncOperationConflictException_Message1.AliTest”,

“timeStamp”: “2018-02-06T16:26:49.4094919Z”,

“httpStatusCode”: 409,

“details”: [

{

“code”: “RootActivityId”,

“message”: “3798339c-6cdc-4f75-9b60-7daad4d8fc9b”

},

{

“code”: “Param1”,

“message”: “AliTest”

LikeLike

Hi Jorg,

Firstly thanks for this post and your reply. I have not been able to process the cube thus far as it always gives me this same error. We tried running it locally from Logic apps and calling it from data factory and in both cases the job doesn’t process. Is there some setting on the SSAS side which we need to modify ?

LikeLike

Hi Ali, according to the documentation 409 means there is a refresh operation running already: “Only one refresh operation is accepted at a time for a model. If there is a current running refresh operation and another is submitted, the 409 Conflict HTTP status code will be returned.”. See: https://blogs.msdn.microsoft.com/analysisservices/2017/12/20/asynchronous-refresh-with-the-rest-api-for-azure-analysis-services/

LikeLike

Sorry I missed that. I kicked the job off again from data factory and this time is showing me the job succeeded but the cube doesn’t process. Instead in the output section I get the below message:

“startTime”: “2018-02-09T16:15:25.7689228Z”,

“type”: “full”,

“status”: “notStarted”,

“currentRefreshType”: “full”,

“objects”: [

{

“status”: “notStarted”

}

I checked the cube to see if it ran but it doesn’t show time changed of last process.

LikeLike

My guess is that the message from data factory gets to the MyLogicApp. Because the MyLogicApp says it is successful. and it just doesn’t send a message to kick it off on SSAS Azure side? Can you advise which layer the issue might be or how to trouble shoot for why the cube isn’t starting even though MyLogicApp is saying it successful?

LikeLike

Ok. I think the issue was that the “database”: “AliTest” command wasn’t kicking off the database but if I did individual tables it did process if specified per table in the ‘Object’ section of the code. Thanks.

LikeLiked by 1 person

Hey, nice blog. I wonder why you use the logic app though? You could pass all the relevant information into your ADF as parameters and call the Azure Analysis services apis using the web activity. That way you could have one ADF pipeline and toggle environments with parameters. Just a thought.

LikeLike

Hi Matt,

Thank you.

That’s not possible. The Azure AS REST API requires OAuth, which isn’t supported in ADF’s Web Activity: https://docs.microsoft.com/en-us/azure/data-factory/control-flow-web-activity#authentication

Next to that Logic Apps offer more capabilities to build advanced workflows, which is something I plan write a blog post about soon.

But to be honest a Logic App is way better in communicating with API’s then ADF should ever be. If OAuth would be there I would still prefer to use a Logic App. In my opinion ADF should be a orchestrator that integrates with all other Azure PaaS compute services for activity dispatches and should not try to replace what other services are already very good at.

Regards,

Jorg

LikeLiked by 1 person

Excellent article, I do appreciate people like yourself carefully documenting these processes. Just as a follow up, ADF now supports MSI authentication through the web task. It might be argued (especially by me 🙂 ) that ADF calling web services directly to orchestrate things is a great example of orchestration. In a data-centric system, it might make more sense to limit the number of platforms used get a job done. i.e. Why add logic apps in to the technology mix if you can just use ADF to get the job done. Thanks again for the article which has saved me a lot of time!

LikeLike

If you are already connecting to a Tabular model’s service, the body of the request can be simplified to: {“type”: “full”, “maxParallelism”: 2}. You can also easily modify the trigger to accept parameters so that you can create one Logic App that can refresh lots of models. “https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-http-endpoint”

LikeLiked by 1 person

Hi Kevin,

Thanks for your reply!

Simplifying the body is a good suggestion and modifying the trigger to accept parameters to refresh multiple different models is indeed one of the many possible scenarios. I plan to write more blog posts on this subject and will definitely mention your suggestion. Thanks!

LikeLike

Can you please elaborate how we can process multiple Azure analysis service models via Logic App..

LikeLike

what if we are not using AAS, but AS on premise? I need to refresh AS on premise after an ADF data load for our warehouse.

LikeLike

Hi Mike,

I would look into using Azure Automation Hybrid Runbook Workers for this scenario. From ADF you can trigger a Azure Automation runbook using a webhook just like I did for the Logic App in this blog post. Hybrid Runbook Workers can execute PowerShell scripts that access on-premises resources.

https://docs.microsoft.com/en-us/azure/automation/automation-hybrid-runbook-worker

https://docs.microsoft.com/en-us/azure/automation/automation-hrw-run-runbooks

Let me know if this works for you!

LikeLike

Hi, I do not find “SQL Server Analysis Services” when I search this in the text box. May I know how do I add this in the available services list?

LikeLike

Hi Unni,

You are right, the name has been changed. You have to search for “Azure Analysis Services” now. I will modify this, thanks!

LikeLike

Hi,

We have followed this article but ends up with huge impact in our tenant. After granting permission to the APP, Azure Analysis services is down which created a impact in our tenant. Can you please clarify below queries.

1. Granting permission to a AAD app will create any impact in other models in Azure Analysis Service?

2. How Azure AD permission works?

3. can we have more than one AAD app for Azure Analysis Service models.

Thanks,

K Senthilrajan

LikeLike

Hi, I don’t recognize your issues. In your article I see you not only granted the required Azure Analysis Services API permissions like described in this blog post, but also Windows AD API permissions. I would like to understand your case: why did you do this and could this be the cause of the problems? Did you get any background information from Microsoft support?

LikeLike

We were simply told that your AAD app blocked all permissions to Azure Analysis Service. Really not understood how it happened? As well as our development team does not have admin rights to accept the consents, and the AAD app is not shared with any other team. Any idea?

LikeLike

Excellent blog. Thanks!

LikeLiked by 1 person

Really great post!

Would you recommend this scenario also when refreshing a Power BI dataset. Or should instead Azure automation be considered + a Powershell script that refreshes the API?

Lastly I saw your comment around oAuth and why logicapps is needed. Where would I be able to find whether Oauth is needed to refresh Power BI datasets?

LikeLike

Excellent Post! Is it possible to process selective table from Azure analysis Service tabular model? can please you share the code

LikeLike

Very cool

I Added a Run Untill loop waiting for completion of refresh by using a GET on REFRESHES

LikeLike

Hi, I have created an SPN and given required permission. But when I tried to execute the logic app, I get an unauthorized error. Any idea? I have added the app id as the server admin.

LikeLiked by 1 person

I also get this error. Any help?

LikeLike

I got my logic app to run by whitelisting the IP addresses for LogicAps in Azure Analysis Services’s firewall: https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-limits-and-config#configuration-ip-addresses

LikeLiked by 1 person

Is there anyway to keep the web activity to wait until the cube has finished processing before it moves onto the next step in the pipeline?

LikeLike

Hi Jorg,

I am not able to user to Analysis Server manually. Can you please let me know the exact steps to add the user manually ?

app:@

LikeLike

OMG. I see this is post is one year old, but I seriously hope that it is easier than this now. Having to create Logic Apps so Data Factory can call those seems like a massive flaw. Data Factory should allow authentication and processing of Azure AS without any sort of intermediate workload. And I mean Azure DF, not SSIS in Azure DF.

LikeLike

I have a model With space in the name e.g. – “My Model”

And when i put this name in the URL it says invalid URI while saving the Logic app.

Is there any way to handle spaces

LikeLike

Hi Jorg,

Great Post! I’m trying to figure out how to issue a Sync command after my processing is complete. The documentation doesn’t go into detail about what information to include in the body of the POST sync command. Have you tried this before and know what the syntax looks like?

LikeLike

Hi Jorg,

Thanks for article .I am able to refresh Tabular cube after following steps.

But we don’t want secret to lie directly in Logicapp .I tried using Managed Identity option for authenticating Azure analysis services in HTTP action.It is not working.Getting an error saying unauthorized.

Tried multiple ways of providing the appid shown for Logicapp as Analysis servicesadmins but not use.

Please guide me the way to use Managed Identity Authentication Option

LikeLike

Hi , i have to get data from a table in Azure Analysis Service, I’ll have to make a get call for it . Can i know the exact syntax for the URI : https:/XXX/servers/YYY/models/ZZZ/table=Account

How do i refer the Account table in AAS. The above command shows error 404 . ignore the XXX, YYY,ZZZ encryption.

LikeLike

Hi Jorg, I ran the step above and all works properly, and for this very thanks.

But now I have another issue.

Using the same approach above, I would like to configure the body of the second activity of the logic app to update the roles of the Analysis Service model.

The query that I would like to perform is something like this:

{

“createOrReplace”: {

“object”: {

“database”: “ddtest”,

“role”: “Readers”

},

“role”: {

“name”: “Readers”,

“modelPermission”: “read”,

“members”: [

{

“memberName”: “user@domain.com”,

“identityProvider”: “AzureAD”

}

]

}

}

}

I have made several attempts but none have succeeded, and also I have not found documentation about it.

Do you know if it’s possible to do this through logic app with the same REST API?

Or maybe you know if there is a dedicated REST API to do this?

Any suggestion is appreciated

Thanks

LikeLike

Thanks for this useful article.

I have a general question and I am hoping you can help me out.

If you need to have a scheduled task that retrieves a dataset containing around 2000 rows from a SQL server hosted on a Azure VW, and you need to process the data and save it in Azure SQL Database, which Azure service you would recommend? Azure Logic App or Data Factory?

Thanks a lot.

LikeLike